Friday, March 31, 2006

Congress and better financial reporting

The Financial Services Subcommittee on Capital Markets, Insurance and Government Sponsored Enterprises, chaired by Rep. Richard H. Baker (LA), will convene for a hearing entitled Fostering Accuracy and Transparency in Financial Reporting. The hearing will take place on Wednesday, March 29 at 10 a.m. in room 2128 of the Rayburn building.

Members of the Subcommittee are expected to discuss ways to promote more transparent financial reporting, including current initiatives by regulators and industry.

For the capital markets to operate most efficiently, information about public companies must be understandable, accessible, and accurate. Corporate statements are mathematical summaries meant to convey a company's condition. The four basic documents which must be filed with the U.S. Securities and Exchange Commission (SEC) are at the heart of investor disclosure: the income statement, the cash flow statement, the balance sheet, and the statement of changes in equity.

Among the current initiatives to improve the clarity and usefulness of public company information are a trend away from quarterly earnings forecasting, the use of technology to decrease complexity, and a review of the various accounting standards and how they interact.

Subcommittee Chairman Baker said, "If U.S. markets are to remain on top in an increasingly competitive global marketplace, we need to move away from the complex and cumbersome and explore technological and other methods of enhancing the clarity, accuracy, and efficiency of our accounting system. At the same time, we need to look at whether earnings forecasting and the beat-the-street mentality, which appears to have contributed to some of the executive malfeasance of the past several years, truly serves the best interest of investors or the goal of long-term economic growth."

The corporate scandals several years ago revealed weaknesses in the financial reporting system. While many companies were violating financial reporting requirements, regulatory complexity also may have contributed to some lapses in compliance.

Fraud, general manipulation of statements, and regulatory complexity all contribute to a reduction in the usefulness of financial statements and all may obfuscate the picture of companies' financial health. A number of recent studies have argued against the practice of predicting future quarterly earnings, concluding that the drive to "make the numbers" can lead to poor business decisions and the manipulation of earnings.

Congress, regulators, and the industry subsequently have assessed financial reporting failures and have reacted with efforts aimed at strengthening the system, including many provisions of the Sarbanes-Oxley Act of 2002.

More recent initiatives by regulators to streamline financial reporting standards and accounting include:

* A Financial Accounting Standards Board (FASB) review of complex and outdated accounting standards;

* The use of principles-based, rather than rules-based, accounting;

* FASB's continued cooperation with the International Accounting Standards Board on the convergence of accounting standards; and

* The use of eXtensible Business Reporting Language, or XBRL, an XML-based language that tags data in financial statements. The use of XBRL allows investors to quickly download financial data into spreadsheets for analysis.

Public companies have been filing financial statements with the SEC since the passage of the Securities Exchange Act of 1934.

Scheduled to testify:

Panel I

Willis Gradison, Acting Chairman, Public Company Accounting Oversight Board

Robert H. Herz, Chairman, Financial Accounting Standards Board

Scott Taub, Acting Chief Accountant, Securities and Exchange Commission

Panel II

David Hirschmann, Senior Vice President, U.S. Chamber of Commerce

Marc E. Lackritz, President, Securities Industry Association

Colleen Cunningham, President, Financial Executives International

Barry Melancon, President, The American Institute of Certified Public Accountants

Rebecca McEnally, Director of Capital Markets Policy, Center for Financial Markets Integrity, CFA Institute

Tuesday, March 28, 2006

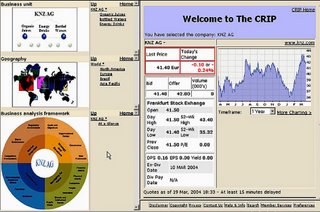

Company DashBoard

Spring is here... and here I am in (snowy!) Minneapolis to present XBRL to an investor relations audience with Dan Roberts, Chair, XBRL US. Dan's day job is Director, Assurance Innovation (hmm, an oxymoron if ever I saw one!) at Grant Thornton. Dan led me to appreciate that we must strive to create new ways of challenging ourselves and our children, and to that point imparted his love and mastery of the unicycle. Dan is a very engaging speaker and always warms the audience with his personal experiences in life. Also joining us in our lively discussion was Garry Lowenthal of Viper Powersports Inc. (Pinksheets: VPWS). Garry is a very affable guy with over twenty years of senior operations and finance experience, having served as a CEO, COO, and CFO, with a record of facilitating acquisitions, business launches, IPOs, reorganizations and turnarounds while driving rapid revenue production. BTW, if you ever want to check out of the rat race and join the life of extreme sports, you must take a peek at Garry's company and his custom bikes.

Thursday, March 02, 2006

Pressing the right buttons

The Accountant: February 28, 2006

A multitude of software products and technological tools are on offer for companies and firms to use as they seek to improve the flow of information when it comes to crunching and analysing the numbers.

Catherine Woods reports on where a rapidly evolving market is heading

There is a sense among regulators and the audit profession that more needs to be done to simplify financial reporting for the users and preparers of accounts, and that technology will have a key role to play in this process.

David Turner, group marketing director for software provider CODA, says that when it comes to using technology, accountants have always been reasonable. He adds: "[They] are also... slightly conservative and you can't blame them for that. Auditors, I think, are probably lagging further behind."

Phil Donarthy is business development manager within the accountants' division for software company Sage. He says that he does not find accountants reluctant to take up new technology, but he finds that many are not as progressive as they could be. He notes that there has not been a sudden shift whereby accountants have become more receptive to new technologies than in the past. "I think we're at the stage now where across all walks of life, people are more receptive to technology and also, let's be honest, technology is getting better," he says.

Slow uptake

A sluggishness to use new systems is illustrated by the results of research commissioned by Sage. Nearly 500 accountants, 200 business start-ups and 2,100 established businesses were polled for the Sage Accountants Business Collaboration (ABC) survey.

Eighty-one percent of accountants who responded felt they could make better use of technology to increase the effectiveness of services they offered. Sixty-nine percent believed it could also cut the cost of providing those services.

Turner says the development of the internet has had one of the greatest impacts on the use of technology in the financial services sector. "You've had a whole new generation of web-based reporting tools that have since come out," he says.

This greater use of technology across the finance spectrum, he adds, is being driven less by clients and investors and more by the general push for efficiency. Turner notes: "Number crunchers want to spend less time creating the numbers and more time analysing them."

However, Donarthy says there is another side to this: accountants want better systems for their clients. "Many accountants are still working with clients who bring them bags full of receipts, rather than actually providing them with any form of formatted data, so a lot of accountants are saying: 'If only my clients could be more efficient, I could be more efficient and provide them with real insight into their business.'"

Providing better business insight for the clients, notes Donarthy, is also better for a firm's bottom line as it means accountants can "concentrate their efforts on the higher value stuff [and] they can bill for more". Technology can also bring greater transparency, which suits today's environment, in which resources are constrained and yet the demands from regulators and stakeholders are high.

Firms are progressive

Helen Nixseaman, partner in risk assurance services at PricewaterhouseCoopers UK (PwC), and Steve Maslin, head of assurance services for Grant Thornton UK, stress that the firms are progressive when it comes to the use of technology.

Maslin says it is something that the accounting network, Grant Thornton International, recognised in the early 1990s after member firms predicted growing commercial and regulatory pressure for there to be greater consistency. That led to the development of a common audit methodology. He says: "Certainly, over the past few years... that's given us huge commercial advantages and enabled us to deal as efficiently as we can with regulatory demands."

In addition to this audit methodology, a lot of work at Grant Thornton International has gone into developing software around internal controls. The network now uses a software product which Maslin says builds up a database of the sorts of internal controls one would expect in different organisations. He notes: "That means whenever we're doing an audit throughout the world, we've got a single methodology for going around and testing the effectiveness of our clients' internal controls system."

The software is linked to another product which enables Grant Thornton International clients to capture their internal controls electronically. Maslin says this is a format which allows a member firm to carry out an audit procedure on controls without a client also documenting it and causing unnecessary duplication.

Regulations and standards

Maslin observes that the latter system has been introduced to help meet the requirements of the US Sarbanes-Oxley Act and the new International Standards on Auditing which now require auditors to look at the design effectiveness of a company's internal controls.

Internal control software, according to Turner, is a part of the business that has grown rapidly in the US and is also picking up in Europe. "Either because of Sarbanes-Oxley or because people in Europe are seeing other legislation coming down the line, they're realising that they're going to have to get their internal controls nailed down," he says.

Another way Grant Thornton International ensures that work being done conforms to international regulations is through the use of electronic audit files. Maslin says by working electronically "we can be confident if we're doing multi-national audits that we're doing the work to a single set of standards, but adding on to it the individual standards of any one country". Audit files in the UK, US and Canadian member firms became electronic around 1999.

PwC has also used electronic working papers for the firm's audit files for a number of years. Nixseaman says: "It just makes sense in terms of sharing information, particularly with teams spread around different locations or even different countries."

The majority of this software at PwC and Grant Thornton has been developed specifically for the firms. Maslin says Grant Thornton International has employed a software team of 15 specialists in North America for the last 15 years to develop and maintain its suite of products.

It is, he adds, better at present to use an in-house system: "We've found the software we've developed and maintained ourselves is certainly a lot more robust and efficient than a lot of the software we've bought from the commercial market."

An area where PwC is looking to further enhance its use of technology is audit methodology. Nixseaman suspects that the firm will move to make more use of the data analytic-type tools referred to by Turner. These tools fall into two main areas: "One is in analysing clients' data, so re-performing calculations or carrying out our own analysis to look for trends or exceptions, and then producing some of our own reports or graphs.

"The second area where we use it is to look at systems such as financial systems or ERP [enterprise resource planning] systems and to look at how those have been configured and how segregation of duties has been set up."
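To give a rough feel for the second kind of analysis Nixseaman describes, checking how segregation of duties has been set up, here is a minimal Python sketch. It is not PwC's tool or any ERP vendor's API: the user-to-duty assignments and the list of conflicting duty pairs are hypothetical stand-ins for what an auditor would extract from a client's system and control framework.

```python
from itertools import combinations

# Hypothetical pairs of duties that should not sit with the same person;
# a real review would take these from the client's own control framework.
CONFLICTING_DUTIES = {
    frozenset({"create_vendor", "approve_payment"}),
    frozenset({"post_journal", "approve_journal"}),
}


def find_sod_conflicts(assignments):
    """Return (user, duty_a, duty_b) for every conflicting pair a user holds.

    `assignments` maps each user ID to the set of duties (permissions)
    configured for that user in the system being reviewed.
    """
    conflicts = []
    for user, duties in sorted(assignments.items()):
        # Check every pair of duties held by this user against the rule set.
        for a, b in combinations(sorted(duties), 2):
            if frozenset({a, b}) in CONFLICTING_DUTIES:
                conflicts.append((user, a, b))
    return conflicts


if __name__ == "__main__":
    users = {
        "alice": {"create_vendor", "approve_payment"},
        "bob": {"post_journal"},
        "carol": {"post_journal", "approve_journal", "create_vendor"},
    }
    for user, a, b in find_sod_conflicts(users):
        print(f"{user}: {a} + {b}")
```

Here "alice" and "carol" would be flagged and "bob" would not. Expressing the rules as unordered pairs keeps the check simple; a real review would also have to account for mitigating controls that this sketch ignores.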

Quicker to use

Turner says these tools are being used by more people now that the technology is quicker to implement and cheaper. He describes these tools as occupying a third level of reporting analysis. The other two levels are, he says, rudimentary online browsing-type and query-type reports which people would run within their accounting systems; and reporting tools, which entail either taking something like Microsoft Excel spreadsheets and turning them into more sophisticated reporting tools, or a purely web-based product which allows accountants to quickly assess something like profit and loss.

The tools on the third level of reporting analysis, Turner claims, are what the market is going to want more of in the next three to five years: "We've just gone through a number of years of focus on big ERP applications. I think there's a reaction against that towards 'light technology' - solutions you can implement fast, that will help you automate your business and that are almost disposable so you can bring them in, use them and then, if necessary, move on to the next technology."

Maslin believes there is more the firm can do with regard to internal control. He says the challenge for Grant Thornton will be to move the current audit approach "from instead of just enabling it to meet our regulatory and professional needs to working with clients to make sure they're using the results of that audit work to actually improve the efficiency and robustness of their own systems".

He identifies a new technology that electronically picks out the parts of company reports that are most often examined as another tool the firm is looking to harness. "It takes electronic financial statements and every time an investor or analyst looks at the company accounts, it builds up a profile of what sections of the accounts seem to be of most interest. That is going to help both issuers and the audit firms to better understand the needs of investors," Maslin notes.
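The profiling idea Maslin describes boils down to counting views per section. Here is a minimal Python sketch of that idea; the class name and section names are hypothetical, and nothing here reflects how the actual product works.

```python
from collections import Counter


class SectionInterestProfile:
    """Tally which sections of a set of electronic accounts readers open.

    Hypothetical sketch: the section names and the idea of one call per
    view are assumptions, not the product described in the article.
    """

    def __init__(self):
        self.views = Counter()

    def record_view(self, section):
        # Called once each time an investor or analyst opens a section.
        self.views[section] += 1

    def most_examined(self, n=3):
        # Sections ranked by how often they have been viewed.
        return self.views.most_common(n)


if __name__ == "__main__":
    profile = SectionInterestProfile()
    for section in ["Cash flow statement", "Notes: revenue recognition",
                    "Cash flow statement", "Balance sheet",
                    "Cash flow statement", "Balance sheet"]:
        profile.record_view(section)
    print(profile.most_examined(2))
```

Run as above, it ranks the cash flow statement first (three views) and the balance sheet second (two). That ranking is the kind of "profile" issuers and auditors could look at.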

A simple language

Simplifying financial reporting for all users of the information has been a driver behind eXtensible Business Reporting Language (XBRL), especially in the US. Greater uptake in the UK is another area of interest to Grant Thornton International. XBRL is an XML-based language that works by tagging data within financial information. The tags then enable automated processing of business information by computer software. XBRL can handle data in different languages and prepared under different accounting standards.
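Here is a minimal Python sketch, using only the standard library, that makes the tagging idea concrete. The fragment is an assumption: a heavily simplified, XBRL-style document with a hypothetical "ex" taxonomy. It is not a valid XBRL instance, since real filings also declare contexts, units and a taxonomy schema. It shows why tagged facts can be pulled straight into a spreadsheet-ready table without re-keying.

```python
import csv
import io
import xml.etree.ElementTree as ET

# A heavily simplified, XBRL-style fragment. Real XBRL instances also
# declare contexts, units and a taxonomy schema; the "ex" namespace and
# element names here are hypothetical.
SAMPLE = """<xbrl xmlns="http://www.xbrl.org/2003/instance"
      xmlns:ex="http://example.com/hypothetical-taxonomy">
  <ex:Revenues contextRef="FY2005" unitRef="USD" decimals="0">1200000</ex:Revenues>
  <ex:NetIncome contextRef="FY2005" unitRef="USD" decimals="0">150000</ex:NetIncome>
  <ex:Revenues contextRef="FY2004" unitRef="USD" decimals="0">1000000</ex:Revenues>
</xbrl>"""


def tagged_facts(xml_text):
    """Yield (concept, period, value) for every tagged fact in the fragment."""
    root = ET.fromstring(xml_text)
    for elem in root:
        # ElementTree reports tags as "{namespace}LocalName"; keep the local name.
        concept = elem.tag.split("}", 1)[-1]
        yield concept, elem.get("contextRef"), int(elem.text)


def to_csv(xml_text):
    """Turn the tagged facts into CSV text that a spreadsheet can open."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["concept", "period", "value"])
    writer.writerows(tagged_facts(xml_text))
    return buf.getvalue()


if __name__ == "__main__":
    print(to_csv(SAMPLE))
```

Because each number carries its own tag and period, the software never has to guess which cell on a printed page holds revenue. That is the point the article makes about automated processing.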

In the US, one of the champions of XBRL is Securities and Exchange Commission (SEC) chairman Christopher Cox who, since taking over from William Donaldson last year, has consistently promoted the use of the technology. The SEC is currently running an XBRL voluntary filing programme which offers companies incentives to take part.

The XBRL project in the UK is not as advanced although Philip Allen, director of XBRL UK - a consortium which advances the use of XBRL in the UK - says there has been a huge increase in interest. Allen says the "massive gain" to be made from the online application in the US is different to the gains to be made in the UK.

"In the US, the issue is you have a huge number of listed companies and no-one can really compare or analyse their accounts. Being able to put it all [into] XBRL will allow people to simplify the analysis of listed company accounts dramatically," says Allen.

In the UK, he suggests that the main benefit will be to improve a company's access to credit. XBRL could allow banks to better process and analyse the accounts they receive periodically from businesses to which they have loaned money.

Allen comments: "If you have a large bank that actually could look at a million corporate accounts and compare them all properly in real time, it would revolutionise the way the bank provides credit. I think that's probably going to have more of an impact on the UK economy than, let's say, what listed companies do. They all list in the US anyway so will be more affected by what the SEC is doing."

Companies House, the official government register of UK companies, and HM Revenue & Customs (HMRC) are spearheading the British government's involvement with XBRL. Allen says both have technically got to the point where they have "solved all the problems relating to the receipt of XBRL". Companies House now has a live service for receipt of XBRL, trialling the system for companies which are exempt from audit.

Allen says Companies House and HMRC are "taking it very carefully and very slowly because they don't want to put a foot wrong on this". He adds that it is widely acknowledged that listed companies in the UK will become more familiar with the software.

Accounting firms and software companies, claims Allen, are listening carefully to the UK government before committing a large amount of capital in this area: "What they understand is that in practical terms, this is all going to be driven by government saying: 'This is how you do your filings.' It's not that they're not interested, it's just that they could lose a lot of money trying to go too fast on this."

As for when the government is likely to insist that companies must use XBRL when filing accounts, Allen says: "Lord Carter [head of the review of HMRC online services] has been undertaking a review of corporate filing processes and it is expected that he will report at some point on this. That report will drive how HMRC reacts." In the meantime, XBRL UK is planning a conference in London during May for accounting practices and software vendors about the Companies House and HMRC projects.

Benefits

Just as the UK is keeping track of the US when it comes to interest in internal controls software, the same trend is expected to happen with XBRL. Allen believes that the Wall Street community is now starting to understand what the investment analyst can do with XBRL and the same equation will occur in the City of London soon, although he acknowledges that "it is fair to say not many people there have quite got that far yet". The possible credit benefits of the technology, he adds, are unlikely to be realised until there is a larger take-up of the production of XBRL accounts.

When it comes to technology which accountants use daily, the US has less influence in the UK. Donarthy's theory is that simplifying the technology he presents to accountants works best: "Accountants want to do a good job. They're not at all interested in the technical details of the solution. They're interested in how it can help them provide a better service to their clients or make their practice as efficient as possible. That's one thing we've learnt - we have to talk about the benefits rather than getting hung up by how clever we are with our latest whiz-bang feature."

Wednesday, March 01, 2006

Folksonomies v. taxonomy

folksonomies + controlled vocabularies

Posted by Clay Shirky

There’s a post by Louis Rosenfeld on the downsides of folksonomies, and speculation about what might happen if they are paired with controlled vocabularies.

Easy, but wrong: folksonomies are clearly compelling, supporting a serendipitous form of browsing that can be quite useful. But they don’t support searching and other types of browsing nearly as well as tags from controlled vocabularies applied by professionals. Folksonomies aren’t likely to organically arrive at preferred terms for concepts, or even evolve synonymous clusters. They’re highly unlikely to develop beyond flat lists and accrue the broader and narrower term relationships that we see in thesauri.

I also wonder how well Flickr, del.icio.us, and other folksonomy-dependent sites will scale as content volume gets out of hand.

This is another one of those Wikipedia cases — the only thing Rosenfeld is saying that’s actually wrong is that ‘lack of development’ bit — del.icio.us is less than a year old and spawning novel work like crazy, so predicting that the thing has run out of steam when people are still freaking out about Flickr seems like a fatally premature prediction.

The bigger problem with Rosenfeld’s analysis is its TOTAL LACK OF ECONOMIC SENSE. We need a word for the class of comparisons that assumes that the status quo is cost-free, so that all new work, when it can be shown to have disadvantages to the status quo, is also assumed to be inferior to the status quo.

The advantage of folksonomies isn’t that they’re better than controlled vocabularies, it’s that they’re better than nothing, because controlled vocabularies are not extensible to the majority of cases where tagging is needed. Building, maintaining, and enforcing a controlled vocabulary is, relative to folksonomies, enormously expensive, both in the development time, and in the cost to the user, especially the amateur user, in using the system.

Furthermore, users pollute controlled vocabularies, either because they misapply the words, or stretch them to uses the designers never imagined, or because the designers say “Oh, let’s throw in an ‘Other’ category, as a fail-safe” which then balloons so far out of control that most of what gets filed gets filed in the junk drawer. Usenet blew up in exactly this fashion, where the 7 top-level controlled categories were extended to include an 8th, the ‘alt.’ hierarchy, which exploded and came to dwarf the entire, sanctioned corpus of groups.

The cost of finding your way through 60K photos tagged ‘summer’, when you can use other latent characteristics like ‘who posted it?’ and ‘when did they post it?’, is nothing compared to the cost of trying to design a controlled vocabulary and then force users to apply it evenly and universally.

This is something the ‘well-designed metadata’ crowd has never understood — just because it’s better to have well-designed metadata along one axis does not mean that it is better along all axes, and the axis of cost, in particular, will trump any other advantage as it grows larger. And the cost of tagging large systems rigorously is crippling, so fantasies of using controlled metadata in environments like Flickr are really fantasies of users suddenly deciding to become disciples of information architecture.

This is exactly, eerily, as stupid as graphic designers thinking in the late 90s that all users would want professional but personalized designs for their websites, a fallacy I was calling “Self-actualization by font.” Then the weblog came along and showed us that most design questions agonized over by the pros are moot for most users.

Any comparison of the advantages of folksonomies vs. other, more rigorous forms of categorization that doesn’t consider the cost to create, maintain, use and enforce the added rigor will miss the actual factors affecting the spread of folksonomies. Where the internet is concerned, betting against ease of use, conceptual simplicity, and maximal user participation, has always been a bad idea.


COMMENTS

1. Simon Willison on January 7, 2005 06:26 PM writes...

Further to your points, I think a key element of folksonomies that is yet to be fully explored is ways of improving their support for "emergent" vocabularies.

Here's an example: I'm posting a picture of a squirrel on flickr; do I tag it with "squirrel" or "squirrels" for best effect? I can find out which term will be most effective by seeing how many pictures are already tagged with those two terms respectively, and going with the most popular.

At the moment that's a slightly tedious manual process, and one that many people are unlikely to bother with - but if the software offered a seamless interface for doing that (a Google Suggest style popup showing how many images are tagged with that tag as you type for example) people would be far more likely to form and follow a consensus.

I'm confident that there are a lot of things that can be done to improve the quality of folksonomy-produced metadata, without increasing the price (and rendering them useless).
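Simon's "Google Suggest style popup" is easy to sketch. The minimal Python function below is hypothetical and not Flickr's API. It assumes you already have every tag applied across the collection, one entry per use, and it returns existing tags that start with what the user has typed, most popular first, so "squirrel" versus "squirrels" settles itself by count.

```python
from collections import Counter


def suggest_tags(prefix, existing_tags, limit=5):
    """Suggest already-used tags beginning with `prefix`, most popular first.

    `existing_tags` holds one entry per time a tag has been applied across
    the collection, so Counter yields each tag's usage count.
    """
    counts = Counter(tag.lower() for tag in existing_tags)
    prefix = prefix.lower()
    matches = [(tag, n) for tag, n in counts.items() if tag.startswith(prefix)]
    # Highest count first; ties broken alphabetically so the order is stable.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return matches[:limit]


if __name__ == "__main__":
    tags = ["squirrel"] * 3 + ["squirrels", "squash", "summer", "Squirrel"]
    print(suggest_tags("squ", tags))
```

Folding case before counting is a design choice that merges "Squirrel" into "squirrel". It nudges users toward consensus without imposing a controlled vocabulary.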

2. Lou Rosenfeld on January 7, 2005 07:29 PM writes...

Clay, interesting comments, but you seem to have missed my point. True, I shared my concerns about folksonomies; I expect you'd agree that they're no panacea. Nothing is. It'd be silly not to be skeptical about them at this early point in their development.

But I'm also quite skeptical about controlled vocabularies. I've probably read all the same studies you have--perhaps more--detailing their high cost. I spent four years in an LIS program and worked in libraries, so I have a little first-hand knowledge. Oddly, people who attend my IA seminar walk away with the sense that I'm against controlled vocabularies. So shoot, Clay, we actually agree on this point.

But how these two forms of metadata might work together is what's really exciting. (And that's why I used the holistic term "Metadata Ecologies" in my posting's title.) They may be quite complementary, which is wonderful, as salvation lies in neither. I hope we might begin brainstorming how they can work together.

We're not even bringing up how the nature of content, users, and context plays out in all this. Folksonomies might work fine for archives of photos. But I'd prefer that my doctor rely on professional indexing to do his research the next time I'm in urgent care with some strange condition. And I'm hopeful that down the road a medical folksonomy might somehow improve on the performance of MeSH headings, thereby increasing my chances of survival.

In the meantime, is there anything else you'd like me to convey to the "‘well-designed metadata’ crowd" at our next meeting (every second Tuesday at the south entrance to Dewey's mausoleum; be there or be uncontrolled)?

3. Jay Fienberg on January 7, 2005 08:23 PM writes...

I'm glad you connected the folksonomy issue to the Wikipedia one, because I think they're similar stories in terms of the battles of loose vs controlled ways of doing things, and how folks who like one or the other tend to react to the other's approach.

But, I think this story of the loose vs controlled battles, however one would tag the two sides, is one that folks like Lou don't fit into so neatly, and that you overreacted to his points.

I think the implication is wrong that folks who practice information architecture automatically fall into some kind of controlled vocabulary metadata control freak category who oppose all wiki folksonomy tag flipsters.

Likewise, I think the implication is wrong that all ordinary folk are, by nature, free tag lovers who'd only desire controlled vocabularies if it got them out of a deal with the devil.

As Lou suggests, there is a whole interesting realm of possibilities wherein both of these approaches are combined and/or co-exist. Even Wikipedia has forms of control--loose vs control is co-existing there.

And, Flickr / del.icio.us have controls in terms of how one can change tags, once they are created--which are controlled vocabulary techniques that (maybe) could actually be removed, IMO, were those folks really committed to folksonomies!

Personally, the most interesting thing to me is creating ways to allow the one approach to evolve into the other, and vice versa, as IMHO, the "right" way is one that can evolve either way, dynamically (e.g., things can be under organized or over organized, and good organization is a dynamic balance between the two).

4. Dave Evans on January 7, 2005 09:38 PM writes...

I think some meta-data will be more controlled than others. Business environment stuff, like "bought by", "owned by", "works for", "funded by", which are the types of tags I'm using in my visualisation system, are pretty easy to standardize. Tagging "squirrel" is probably good enough for most people without having to worry about plural forms, or black or red squirrels. I wonder if there is a way to self-organize tags against the most popular ones that emerge over time? Changing tags in one fell swoop like in Flickr might be a (scary) good thing, like upgrading software for new features.

5. Rick Thomas on January 7, 2005 09:53 PM writes...

This is a microcosm of the process of language formation. For matters of consensual reality language is fairly fixed. When there's something new to talk about language is fluid and then converges as the subject is understood. The resulting language will always vary by community - English vs. Russian, engineers vs. marketers - because they have different experiences. Bridging communities depends on multi-lingual people using clever tools.

This is also why it's easy for a million bloggers to write quick opinions, but relatively harder to synthesize collaborative works - there is an unavoidable cost of semantic reconciliation.

Evolution uses this algorithm to create life. Start with any found stability. Produce diversity. Choose better stability. Create highly conserved systems along the way.

6. Shannon Clark on January 8, 2005 12:16 AM writes...

It seems to me that there is another, very significant and high "cost" to controlled vocabularies - except in a very few cases, users have to learn (and/or navigate/use other tools) the vocabulary to use it, let alone use it effectively.

i.e. take an extreme example of a library shelving system - it is not at all trivial or obvious to most users (let alone professionals) where a given book "should" be shelved and I assume the process of integrating new/emergent categories is a decidedly non-trivial one. A library shelving system also shows one of the major flaws of many formal metadata systems for many users - they assume an either/or system - i.e. a book can only be in one place at a time, so it is either in one category or another, but not both (at least not physically).

Online there are countless cases when a user, very logically, wants something to be multiply tagged - i.e. it is both a book on business and a book on technology, it is a photo of myself as well as a photo containing a monkey, etc.

It is also useful to keep in mind why, where, how and for whom users apply metadata (in non-formal situations). Most of the time in most systems users apply none or very little metadata. It is only when doing so adds value very directly for the user that users, generally speaking, take the time to add metadata.

- blog posts might get metadata if someone wants to make it easier for themselves to find their own posts, and/or, if they have enough readers, to help those readers find related posts

- photos may get tagged if someone wants to make it easier for their friends to find specific photos, as well as, at times, to open up photos (à la Flickr) to a wider audience, such as other attendees of the same event.

These fairly ad hoc, mostly relatively limited-in-scope uses of metadata differ very widely from the more formal uses imagined by many people - such as the "Semantic Web" crowd etc. In those cases the assumption is that metadata (and extensive formal metadata at that) is to some degree inherently valuable and useful - but also that it will enable a new class of applications and uses.

I would argue that most of the time the cost of doing all of this tagging, especially the cost of learning the system for tagging (which is more than just learning the names of the tags - it is also learning how to pick and choose between tags, how to search for the "right" tag(s) etc) is vastly higher than most people (or their companies that pay for their time if done in a professional environment) are willing to incur.

Potentially some tools can be built to automate the process - to suggest tags, to apply many of them in a mostly painless and automated way - though all such systems have to guard against inaccuracy as well as the "other" category problem Clay highlights.

In short - an important topic for discussion and one where I pretty much agree with Clay.

Shannon

7. Bill Seitz on January 8, 2005 11:06 AM writes...

I wonder whether folksonomies will just turn into free-text search engines? That's the other extreme of the uncontrolled-vocabulary spectrum...

8. Bill Seitz on January 8, 2005 11:12 AM writes...

Specifying which *contexts* are being discussed seems awfully relevant for discussions like this.

The more coherent (non-diverse?) the "user" "community", the more easily a SharedLanguage can emerge and be maintained...

http://webseitz.fluxent.com/wiki/SharedLanguage

Check out this outlandishly clueless call for a "well-designed" web:

http://www.opendemocracy.net/debates/article-8-10-2277.jsp

Not only are all of Thompson's complaints completely wrong, they are the key drivers of the web's crazy success!

10. Edward Vielmetti on January 9, 2005 02:27 AM writes...

This discussion reminds me of the James Fallows NY Times piece on knowledge management where he distinguishes between the "big heap of laundry" approach (= folksonomy) and the "neatly folded PJs" (= taxonomy) approach to handling volumes of information.

Given how much attention people pay to presentation when it comes to materials that they expect to have a big impact or a long lifetime, I can only expect that we'll continue to see both systems in place, sometimes in parallel, as long as there are exclusive categories (Michelin 4-star restaurants) where common-folks opinions aren't the point.

What if the descriptive taxonomy (what this thing is) was open-ended (a folksonomy), but the functional taxonomy (what would you do with this thing) was controlled?

So, say I was bookmarking this post: I could tag it with any words I wanted - tech, library, cataloging, and so on. Those words describe what this item is about in ways that are primarily relevant to me. If they also happen to make sense for someone else, fine.

Then I would also have to choose one or more verbs, words that describe what I want to do with this item. Do I want to read it, save it, comment on it, disagree with it, build something with it, etc.
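The hybrid scheme in this last comment can be expressed as a small data structure: open-ended descriptive tags alongside a required set of verbs drawn from a controlled list. The minimal Python sketch below is hypothetical throughout. The verb list is taken from the comment's own examples, and the `Bookmark` class and example URL are illustrations, not any real bookmarking service.

```python
from dataclasses import dataclass, field

# Controlled "functional" vocabulary: what the reader means to do with the
# item. The verbs come from the comment's own examples; the exact list and
# the class below are hypothetical.
ACTION_VERBS = frozenset({"read", "save", "comment", "disagree", "build"})


@dataclass
class Bookmark:
    url: str
    # Descriptive tags are open-ended (folksonomy): any words are accepted.
    descriptive_tags: set = field(default_factory=set)
    # Action verbs must be chosen from the controlled list.
    actions: set = field(default_factory=set)

    def __post_init__(self):
        if not self.actions:
            raise ValueError("choose at least one action verb")
        unknown = self.actions - ACTION_VERBS
        if unknown:
            raise ValueError(f"not in the controlled vocabulary: {sorted(unknown)}")


if __name__ == "__main__":
    b = Bookmark("http://example.com/post",
                 {"tech", "library", "cataloging"},
                 {"read", "comment"})
    print(sorted(b.descriptive_tags), sorted(b.actions))
```

Only the verb field is validated. This keeps the cheap, flexible folksonomy where description is personal, and applies control only to the small, stable axis where a shared vocabulary pays off.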